Abby Webb

Head of Search & Content

Abby heads up our SEO and content campaigns, with a strong background in copywriting, content and paid search marketing.

For your site to appear in Google Search results, it needs to be indexed. Several factors can affect how long it takes Google to index your site.

You’ve recently launched your new website or you’ve updated one of your pages, but when you Google it, it doesn’t appear among the search results. This could mean that your site or page has not been indexed by Google yet.

Indexing is the process by which Google adds new or updated pages to its index (or library) of possible search results. Google suggests allowing at least a week for a new site or page to be indexed, but it can take Google weeks, or even months, to index a site or page.

“The total time can be anywhere from a day or two to a few weeks, typically, depending on many factors.” (Google)

If you think your site or page isn’t indexed but want to make sure it isn’t just ranking low in the results, search for the specific URL in Google Search with the “site:” operator in front of it (no space in between). If it doesn’t show up, it probably hasn’t been indexed. For detailed insights into its status, you can also inspect the URL with the URL Inspection tool in Google Search Console.

Here are the main factors that can affect how long it takes Google to index your site, and what you can do to improve your chances of being indexed.

First off, it’s helpful to know how Google does its work. To discover new pages, Google uses GoogleBot, its web crawler (or “spider”) software, which crawls the internet by following hyperlinks from pages it already knows about.
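
To make the link-following idea concrete, here is a minimal Python sketch (not Google’s code) of the first thing any crawler does on a page: collect the links it can follow to discover more of the same site. It uses only the standard library; the class and function names and the example.com URLs are hypothetical.

```python
from html.parser import HTMLParser
from urllib.parse import urljoin, urlparse


class LinkExtractor(HTMLParser):
    """Collects the absolute URL of every <a href="..."> link on a page."""

    def __init__(self, base_url: str) -> None:
        super().__init__()
        self.base_url = base_url
        self.links: list[str] = []

    def handle_starttag(self, tag, attrs):
        if tag != "a":
            return
        for name, value in attrs:
            if name == "href" and value:
                # Resolve relative links such as "/services" against the page URL.
                self.links.append(urljoin(self.base_url, value))


def internal_links(page_url: str, html: str) -> list[str]:
    """Return the links on a page that stay on the same host: the ones a
    crawler would follow to discover more pages of this site."""
    parser = LinkExtractor(page_url)
    parser.feed(html)
    parser.close()
    host = urlparse(page_url).netloc
    return [link for link in parser.links if urlparse(link).netloc == host]


if __name__ == "__main__":
    page = '<a href="/services">Services</a> <a href="https://partner.example/">Partner</a>'
    print(internal_links("https://www.example.com/", page))
```

A page that no other page links to never shows up in any list like this, which is why orphan pages (covered below) are hard for a crawler to find.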

Google doesn’t index all of a site at once. When you launch a new site, for example, the homepage may be indexed before other pages. You can also use Google Search Console to manually request indexing for a new or updated page, but this doesn’t necessarily mean your page will be indexed faster. Take it from Google’s John Mueller:

If you’re launching a brand new website for your business, then follow these steps:

The number of pages on your site affects how long it takes GoogleBot to crawl it. On a large site, that can slow down how quickly new pages are indexed, or how quickly the whole site is indexed after a launch or relaunch.

The fix: Be patient!

The speed of your site can also affect how long it takes for Google to index it. GoogleBot aims to crawl as many pages on a site as it can without overwhelming that site’s bandwidth. If your site is already slow and easily overwhelmed, GoogleBot will slow down or stop crawling it in order to prevent the crawling process from affecting the site’s performance.

The fix: Speak to a developer about what you can do to make your server and website faster, so that GoogleBot’s crawling doesn’t overload them.
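
Google describes its crawl capacity as going up when a site responds quickly for a while, and down when the site slows down or returns server errors. The toy Python function below illustrates that idea with a simple back-off rule. It is not Google’s algorithm: the function name, thresholds and multipliers are assumptions chosen purely for illustration.

```python
def next_delay(current_delay: float, response_seconds: float, status_code: int,
               slow_threshold: float = 1.0, min_delay: float = 0.5,
               max_delay: float = 60.0) -> float:
    """Return how many seconds to wait before the next request.

    Toy rule (illustrative values, not Google's): back off when the server is
    slow or erroring, and speed up gradually while it stays healthy.
    """
    if status_code >= 500 or response_seconds > slow_threshold:
        # Server error or slow response: double the wait to ease the load.
        new_delay = current_delay * 2
    else:
        # Fast, healthy response: shorten the wait by 20%.
        new_delay = current_delay * 0.8
    # Keep the delay within the configured bounds.
    return max(min_delay, min(max_delay, new_delay))


if __name__ == "__main__":
    delay = 1.0
    # Simulated (response time in seconds, HTTP status) pairs from a struggling server.
    for seconds, status in [(0.3, 200), (2.4, 200), (0.4, 503), (0.2, 200)]:
        delay = next_delay(delay, seconds, status)
        print(f"{status} in {seconds}s -> wait {delay:.1f}s")
```

The point of the sketch is the direction of the effect: every slow response or server error means longer gaps between requests, so fewer pages get crawled in the same time.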

Orphan pages are pages which aren’t linked to from any other pages on your site. Because GoogleBot crawls by following hyperlinks, this can make it difficult for Google to find a page and index it.

The fix: Make sure every page is linked to from at least one other page on your website. Tools such as SEMRush, Screaming Frog or Ahrefs can help you find orphan pages. Ideally, all new pages should have a prominent link on the homepage.
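
If you’d rather check for orphan pages yourself, the logic is straightforward once you have a list of your pages and the internal links on each one (for example, exported from a crawler tool or your CMS). This is a minimal sketch assuming that data is already in a Python dictionary; the function name and page paths are hypothetical.

```python
from collections.abc import Iterable


def find_orphan_pages(links: dict[str, set[str]],
                      entry_points: Iterable[str] = ()) -> set[str]:
    """Return pages that no other page on the site links to.

    `links` maps every known page (e.g. from your sitemap or CMS) to the set of
    internal pages it links out to. `entry_points` (such as the homepage) are
    excluded because crawlers reach them directly.
    """
    linked_to: set[str] = set()
    for page, targets in links.items():
        # A page that only links to itself is still undiscoverable.
        linked_to.update(target for target in targets if target != page)
    return set(links) - linked_to - set(entry_points)


if __name__ == "__main__":
    site = {
        "/": {"/services", "/about"},
        "/services": {"/", "/about"},
        "/about": {"/"},
        "/spring-offer": {"/"},  # nothing links here
    }
    print(find_orphan_pages(site, entry_points={"/"}))
```

This only flags pages with no inbound links at all. A page linked only from another orphan page still can’t be reached from the homepage, which a full crawl starting from the homepage would catch.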

If you’re building your site with a service like WordPress, it’s worth checking that you haven’t accidentally ticked a box that prevents or discourages Google from crawling the site. In WordPress’ settings, for example, you might have ticked “Discourage search engines from indexing this site” under “Search Engine Visibility.” This setting is usually used for businesses’ campaign-specific pages or other pages they don’t want anyone to be able to see.

The fix: Check (or un-check!) all visibility settings before going live.
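
On recent WordPress versions, that setting typically works by adding a robots meta tag containing “noindex” to your pages, and the same directive can also be sent in an X-Robots-Tag HTTP header. A rough standard-library check for either might look like the sketch below. The function names are hypothetical, and you would supply the page HTML and header value yourself (for example, from your browser’s View Source and developer tools).

```python
from html.parser import HTMLParser


class RobotsMetaFinder(HTMLParser):
    """Collects the content of <meta name="robots"> and <meta name="googlebot"> tags."""

    def __init__(self) -> None:
        super().__init__()
        self.directives: list[str] = []

    def handle_starttag(self, tag, attrs):
        if tag != "meta":
            return
        # HTMLParser lower-cases attribute names; valueless attributes give None.
        attributes = {name: (value or "") for name, value in attrs}
        if attributes.get("name", "").lower() in ("robots", "googlebot"):
            self.directives.append(attributes.get("content", "").lower())


def is_noindexed(page_html: str, x_robots_tag: str = "") -> bool:
    """Rough check: True if a robots meta tag or the X-Robots-Tag header says 'noindex'."""
    finder = RobotsMetaFinder()
    finder.feed(page_html)
    finder.close()
    sources = finder.directives + [x_robots_tag.lower()]
    return any("noindex" in directive for directive in sources)


if __name__ == "__main__":
    print(is_noindexed("<head><meta name='robots' content='noindex, nofollow' /></head>"))
```

If this returns True for a page you want in Google, that tag or header is the first thing to remove before going live.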

Duplicate content can slow down GoogleBot’s crawling of your site, and potentially your site itself. It’s always worth doing regular maintenance to make sure you don’t have unnecessary duplicate pages.

The fix: As simple as the problem. If the duplicate content wasn’t intentional, delete the duplicate pages. If it was intentional, add a canonical tag to the duplicate to tell the crawler which page is the original, preferred version. A developer can help you with this.
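
To spot exact duplicates before deciding which pages to delete or canonicalise, you can compare a fingerprint of each page’s text. The sketch below assumes you already have each URL’s main text in a dictionary (the names and URLs are hypothetical). It groups URLs whose text matches after normalising case and whitespace, and builds the standard `<link rel="canonical">` tag you would place in the `<head>` of each duplicate, pointing at the version you want indexed.

```python
import hashlib
import html
import re
from collections import defaultdict


def normalise(text: str) -> str:
    """Lower-case and collapse whitespace so formatting differences don't hide a duplicate."""
    return re.sub(r"\s+", " ", text).strip().lower()


def find_duplicate_groups(pages: dict[str, str]) -> list[list[str]]:
    """Group URLs whose normalised text is identical. `pages` maps URL -> page text."""
    groups: dict[str, list[str]] = defaultdict(list)
    for url, text in pages.items():
        fingerprint = hashlib.sha256(normalise(text).encode("utf-8")).hexdigest()
        groups[fingerprint].append(url)
    # Only fingerprints shared by more than one URL indicate duplicate content.
    return [urls for urls in groups.values() if len(urls) > 1]


def canonical_tag(preferred_url: str) -> str:
    """Build the tag to put in the <head> of each duplicate page."""
    return f'<link rel="canonical" href="{html.escape(preferred_url, quote=True)}">'


if __name__ == "__main__":
    pages = {
        "https://www.example.com/offer": "Spring offer: 20% off all services.",
        "https://www.example.com/offer-copy": "Spring  offer: 20% off ALL services.",
        "https://www.example.com/about": "About our team.",
    }
    for group in find_duplicate_groups(pages):
        preferred, *duplicates = group
        for url in duplicates:
            print(url, "->", canonical_tag(preferred))
```

Treating the first URL in each group as the preferred version is an arbitrary choice for the example; in practice you would pick the page you want to rank. Near-duplicates (pages that differ by a sentence or two) won’t match an exact fingerprint, which is where the crawler tools mentioned above help.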

Remember, just because you submit your sitemap or request indexing in Google Search Console, there’s no guarantee that Google will index your website. However, following the advice above will improve your chances of indexing.
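
An XML sitemap lists the URLs you want Google to know about, following the sitemaps.org protocol; you can submit it in Search Console’s Sitemaps report or reference it from robots.txt with a `Sitemap:` line. Most CMSs generate one automatically, but as a minimal sketch, here is how one could be built with Python’s standard library. The URLs and dates are placeholders, and `build_sitemap` is a hypothetical helper name.

```python
import xml.etree.ElementTree as ET
from typing import Optional

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def build_sitemap(entries: list[tuple[str, Optional[str]]]) -> str:
    """Return sitemap XML for (url, lastmod) pairs; lastmod is optional (e.g. '2024-05-01')."""
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for loc, lastmod in entries:
        url = ET.SubElement(urlset, "url")
        # ElementTree escapes characters such as & for us, as the protocol requires.
        ET.SubElement(url, "loc").text = loc
        if lastmod:
            ET.SubElement(url, "lastmod").text = lastmod
    body = ET.tostring(urlset, encoding="unicode")
    return '<?xml version="1.0" encoding="UTF-8"?>\n' + body


if __name__ == "__main__":
    print(build_sitemap([
        ("https://www.example.com/", "2024-05-01"),
        ("https://www.example.com/new-page", None),
    ]))
```

A sitemap helps Google discover your URLs, including any that are poorly linked, but as above it doesn’t guarantee they will be indexed.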

This also doesn’t guarantee you’ll rank highly in the search results. When it comes to where you’ll rank in the search results once you’re indexed, read these articles:

Head of Search & Content

Abby heads up our SEO and content campaigns, with a strong background in copywriting, content and paid search marketing.

View my other articles and opinion pieces below

Google’s AI search demands higher standards for YMYL content. Learn how to keep your financial, legal or health advice visible, trusted and compliant.

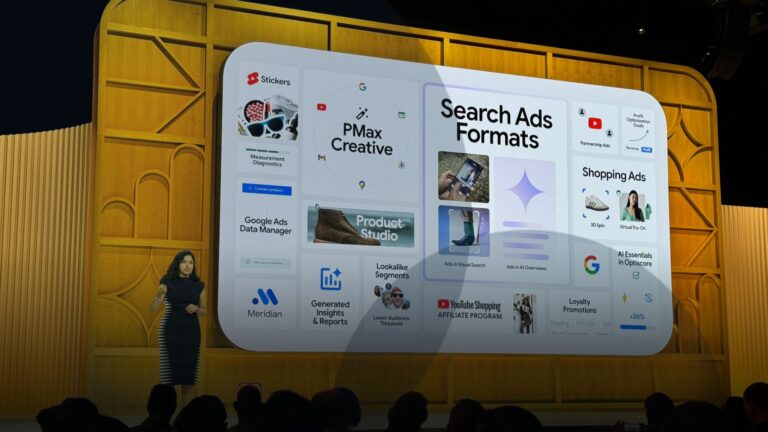

At Google Marketing Live 2025, the focus was clear: AI is already changing the way people search, and as a result, it’s changing what brands need to do to show up in search results. Here’s what you need to know – and what it means for your marketing.

Love it or hate it, everyone’s seen it. Google’s AI Overviews are changing the way your search results appear. Now, AI-generated summaries will often answer user questions before the usual list of site links we’ve come to expect. In fact, 47% of Google Search results now include an AI Overview – at least, according to AI […]

This short guide will teach you how to track your marketing campaigns using UTM parameters. Also referred to as a custom URL, a UTM tag is a customised snippet of text (called a parameter) that is added at the end of a website address. This UTM tag allows you to track and identify the traffic […]